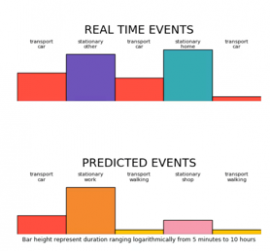

Usage-based insurance solutions, in which smartphone sensor data is used to analyze the driver’s behavior, are becoming prevalent. However, a major shortcoming of most solutions on the market today is that trips where the user was a passenger, e.g. taxi trips, get included in...

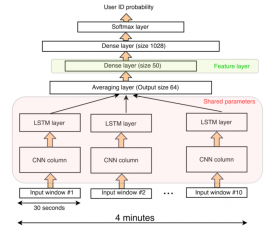

Continue reading » September 25, 2017 · Vincent Spruyt · Other · No Comment

At Sentiance, we use machine learning to extract intelligence from smartphone sensor data such as accelerometer, gyroscope and location. We’ve been doing this for quite a while now, and are very proud of our state-of-the-art results regarding sensor-based activity detection, map matching, driving behavior, venue mapping and...

Continue reading » April 26, 2017 · Vincent Spruyt · Other · 1 Comment

In this article, we discuss how Principal Component Analysis (PCA) works, and how it can be used as a dimensionality reduction technique for classification problems. At the end of this article, Matlab source code is provided for demonstration purposes. In an earlier article, we discussed the so-called...

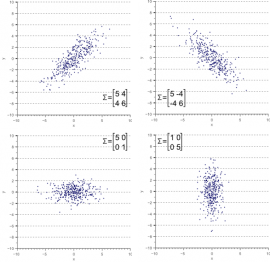

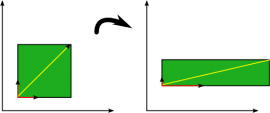

Continue reading » May 16, 2014 · Vincent Spruyt · Feature extraction · 14 Comments

In this article, we provide an intuitive, geometric interpretation of the covariance matrix, by exploring the relation between linear transformations and the resulting data covariance. Most textbooks explain the shape of data based on the concept of covariance matrices. Instead, we take a backwards approach and explain the...

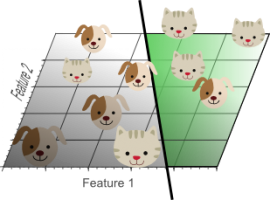

Continue reading » April 24, 2014 · Vincent Spruyt · Linear algebra · 47 Comments

In this article, we will discuss the so-called ‘Curse of Dimensionality’, and explain why it is important when designing a classifier. In the following sections I will provide an intuitive explanation of this concept, illustrated by a clear example of overfitting due to the curse of dimensionality....

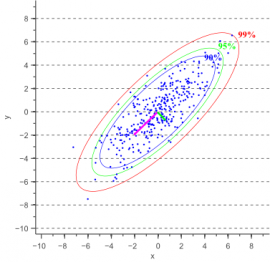

Continue reading » April 16, 2014 · Vincent Spruyt · Feature extraction · 52 Comments

In this post, I will show how to draw an error ellipse, a.k.a. confidence ellipse, for 2D normally distributed data. The error ellipse represents an iso-contour of the Gaussian distribution, and allows you to visualize a 2D confidence interval. The following figure shows a 95% confidence ellipse for...

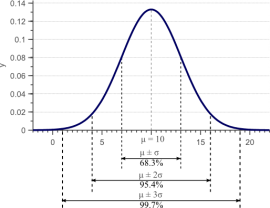

Continue reading » April 3, 2014 · Vincent Spruyt · Statistics · 62 Comments

In this article, we will derive the well-known formulas for calculating the mean and the variance of normally distributed data, in order to answer the question in the article’s title. However, for readers who are not interested in the ‘why’ of this question but only in the...

Continue reading » March 7, 2014 · Vincent Spruyt · Statistics · 18 Comments

Eigenvectors and eigenvalues have many important applications in computer vision and machine learning in general. Well-known examples are PCA (Principal Component Analysis) for dimensionality reduction or EigenFaces for face recognition. An interesting use of eigenvectors and eigenvalues is also illustrated in my post about error ellipses. Furthermore,...

Continue reading » March 5, 2014 · Vincent Spruyt · Linear algebra · 20 Comments